performance_test_suite_v1.docx

Purpose

This document defines a performance test suite for Tungsten Fabric. A unified, simplified performance test methodology helps users to:

- Quickly measure Tungsten Fabric performance in a simple environment, without depending on hardware test tools or instruments

- Check performance with one unified tool when doing performance optimization

- Align the performance checking methodology between developers and maintainers, as a criterion for merging patches

General

Hardware requirements

1x Intel Xeon server as TF Controller 1

- E.g. Intel® Xeon® Processor E5-2699 v4 or newer

- 256GB RAM

- 1TB HDD

- 10G/25G/40G network interface controller (NIC) attached to NUMA node 0

2x Intel Xeon servers as TF DUT1 and DUT2 (devices under test)

- E.g. Intel® Xeon® Processor E5-2699 v4 or newer

- 128GB RAM

- 1TB HDD

- 10G/25G/40G network interface controller (NIC) attached to NUMA node 0

1x internal switch for the test network

- Supports the bandwidth you want to test (10G/25G/40G)

- Optional: if you want to test NIC bonding, the switch must support link aggregation, e.g. LACP mode
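The NUMA node 0 requirement can be checked on each server from sysfs. For a PCI NIC, Linux exposes the node in /sys/class/net/<interface>/device/numa_node, and a value of -1 means the firmware did not report one. This is a minimal sketch that assumes a PCI-attached NIC. The function names and the optional sysfs-root argument are hypothetical; the argument exists only so the helpers can be tried without real hardware.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the NUMA node of a network interface.
# $1 = interface name (e.g. ens802f0), $2 = optional sysfs root (default /sys).
nic_numa_node() {
  local iface="$1" sysfs="${2:-/sys}"
  local path="$sysfs/class/net/$iface/device/numa_node"
  if [[ ! -r "$path" ]]; then
    echo "no NUMA information for $iface" >&2
    return 1
  fi
  cat "$path"   # -1 means the firmware did not report a node
}

# Hypothetical check: succeed only if the NIC sits on NUMA node 0.
check_nic_on_node0() {
  local node
  node=$(nic_numa_node "$@") || return 1
  if [[ "$node" == 0 ]]; then
    echo "$1 is on NUMA node 0"
  else
    echo "WARNING: $1 is on NUMA node $node" >&2
    return 1
  fi
}
```

For example, run check_nic_on_node0 ens802f0 on the controller and check_nic_on_node0 enp24s0f0 on the DUTs, substituting your own interface names.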

Software Requirements

BIOS:

- Disable Turbo Boost

- Set power management to “Performance”

- Enable C0/C1

OS: CentOS 7.5 Minimal

Kernel parameters to isolate cores for testing, e.g.:

```text
isolcpus=10-27,38-55,66-83,94-111 nohz_full=10-27,38-55,66-83,94-111 rcu_nocbs=10-27,38-55,66-83,94-111 irqbalance=off mce=ignore_ce intel_pstate=disable iommu=pt intel_iommu=on
```
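On CentOS 7, these parameters can be appended to every installed kernel with grubby --update-kernel=ALL --args="...", followed by a reboot. Afterwards, cat /proc/cmdline shows whether they are active. To see exactly which cores a CPU list covers, a small helper can expand the range syntax used by isolcpus=, nohz_full= and rcu_nocbs=. This is a sketch; the function name expand_cpulist is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: expand a kernel CPU list such as "10-27,38-55"
# into one CPU ID per line.
expand_cpulist() {
  local IFS=','          # split the list on commas
  local item start end cpu
  for item in $1; do
    if [[ "$item" == *-* ]]; then
      start=${item%-*}   # text before the dash
      end=${item#*-}     # text after the dash
    else
      start=$item
      end=$item
    fi
    for ((cpu = start; cpu <= end; cpu++)); do
      echo "$cpu"
    done
  done
}

# The example isolcpus= value isolates 4 ranges of 18 cores each.
expand_cpulist "10-27,38-55,66-83,94-111" | wc -l   # 72
```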

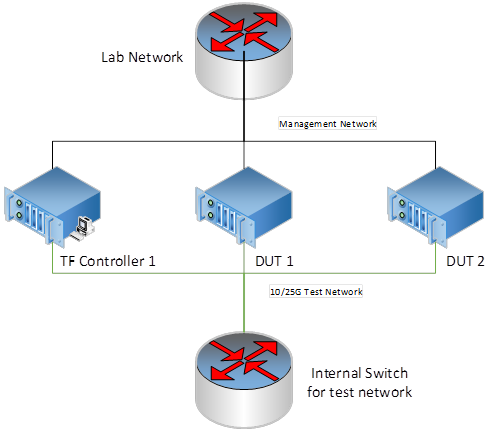

Physical Network Topology for testing

The physical topology has two networks: one for management and one for testing.

Tungsten Fabric Setup

Setting up a 3-node Tungsten Fabric environment is fully automated with the playbooks at https://github.com/Juniper/contrail-ansible-deployer. Follow the guide in that repository to set up the Tungsten Fabric system. OpenStack is used as the orchestrator for testing.
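At the time of writing, the contrail-ansible-deployer README drives a deployment with three playbooks. They are run from the repository root once config/instances.yaml is in place. The sketch below only wraps those commands. Treat the playbook names and the -e orchestrator=openstack variable as assumptions to verify against the README of the branch you check out. The run wrapper and the DRY_RUN switch are hypothetical conveniences for previewing the commands.

```bash
#!/usr/bin/env bash
# Print commands instead of executing them when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Assumed playbook sequence from the contrail-ansible-deployer README;
# each step runs only if the previous one succeeded.
deploy_tf() {
  run ansible-playbook -i inventory/ -e orchestrator=openstack playbooks/configure_instances.yml &&
  run ansible-playbook -i inventory/ playbooks/install_openstack.yml &&
  run ansible-playbook -i inventory/ -e orchestrator=openstack playbooks/install_contrail.yml
}
```

DRY_RUN=1 deploy_tf prints the three commands; deploy_tf without the switch runs them in order.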

Reference instances.yaml for the 3-node setup

```yaml
# Copy this file to contrail-ansible-deployer/config/instances.yaml
provider_config:
  bms:
    ssh_pwd: tester
    ssh_user: root
    ntpserver: ntp.ubuntu.com
    domainsuffix: sh.intel.com

instances:
  bms1:
    provider: bms
    ip: 10.67.111.103
    roles:
      openstack:
      config_database:
      config:
      control:
      analytics_database:
      analytics:
      webui:
      openstack_compute:
      vrouter:
        PHYSICAL_INTERFACE: ens802f0
        CPU_CORE_MASK: "0xff0"
        DPDK_UIO_DRIVER: igb_uio
        HUGE_PAGES: 3000
        AGENT_MODE: dpdk
  bms2:
    provider: bms
    ip: 10.67.111.101
    roles:
      openstack_compute:
      vrouter:
        PHYSICAL_INTERFACE: enp24s0f0
        CPU_CORE_MASK: "0xff0"
        DPDK_UIO_DRIVER: igb_uio
        HUGE_PAGES: 10240
        AGENT_MODE: dpdk
  bms3:
    provider: bms
    ip: 10.67.111.102
    roles:
      openstack_compute:
      vrouter:
        PHYSICAL_INTERFACE: enp24s0f0
        CPU_CORE_MASK: "0xff0"
        DPDK_UIO_DRIVER: igb_uio
        HUGE_PAGES: 10240
        AGENT_MODE: dpdk

contrail_configuration:
  CLOUD_ORCHESTRATOR: openstack
  CONTRAIL_VERSION: 5.0.0-0.40-ocata
  CONTROL_DATA_NET_LIST: 192.168.1.0/24
  KEYSTONE_AUTH_HOST: 192.168.1.105
  KEYSTONE_AUTH_ADMIN_PASSWORD: c0ntrail123
  RABBITMQ_NODE_PORT: 5673
  KEYSTONE_AUTH_URL_VERSION: /v3
  IPFABRIC_SERVICE_IP: 192.168.1.105
  VROUTER_GATEWAY: 192.168.1.100
  HTTP_PROXY: "<if you have proxy>"
  HTTPS_PROXY: "<if you have proxy>"
  NO_PROXY: "<if you have proxy>"
  DPDK_UIO_DRIVER: "igb_uio"

# 10.67.111.200 must be an unused IP address: ping it before you set it
kolla_config:
  kolla_globals:
    kolla_internal_vip_address: 192.168.1.105
    kolla_external_vip_address: 10.67.111.200
    contrail_api_interface_address: 192.168.1.103
    keepalived_virtual_router_id: "235"
    enable_haproxy: "yes"
    enable_ironic: "no"
    enable_swift: "no"
  kolla_passwords:
    keystone_admin_password: c0ntrail123
```
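Two vRouter values in instances.yaml are easy to misread: the hexadecimal CPU_CORE_MASK and the HUGE_PAGES count. CPU_CORE_MASK is a bitmask in which bit n selects CPU n for the DPDK vRouter. The sketch below decodes the mask so it can be compared with the isolcpus= list, and converts HUGE_PAGES to memory. The conversion assumes HUGE_PAGES counts 2 MiB pages (the x86 default hugepage size); that unit is an assumption. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the CPU IDs selected by a hex core mask.
mask_to_cpus() {
  local mask=$(( $1 ))   # bash arithmetic understands the 0x prefix
  local cpu=0
  local -a cpus=()
  while (( mask > 0 )); do
    if (( mask & 1 )); then
      cpus+=("$cpu")     # bit set: this CPU is selected
    fi
    mask=$(( mask >> 1 ))
    cpu=$(( cpu + 1 ))
  done
  echo "${cpus[*]}"
}

# Hypothetical helper: hugepage memory in MiB, assuming 2 MiB pages.
hugepages_mib() {
  echo $(( $1 * 2 ))
}

mask_to_cpus 0xff0      # 4 5 6 7 8 9 10 11
hugepages_mib 10240     # 20480 (MiB) on each DUT
hugepages_mib 3000      # 6000 (MiB) on bms1
```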

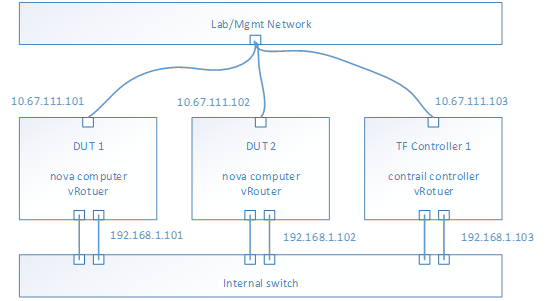

After a successful deployment, the network and service topology should look like this:

- E.g. the management network is 10.67.111.0/24

- E.g. the test network is 192.168.1.0/24

- Check the test network by pinging each IP address from each host

- The Nova compute service should be running on each DUT host

- The vRouter service should be running on each host

- The Contrail controller services should be running on the TF Controller host

- Since TF 5.0, all services run in isolated Docker containers

- Use docker ps to check the running status of each service (Kolla/OpenStack and Contrail)

- Use contrail-status to check the health of the Contrail services

- Optional: if you want to test the bonding driver, configure the bond device in the host OS and set the PHYSICAL_INTERFACE field in instances.yaml to the bond device.
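The post-deployment checks can be grouped into two small functions and run on each host. This is a sketch: the function names are hypothetical, and the ping options (-c count, -W timeout in seconds) are those of the Linux iputils ping.

```bash
#!/usr/bin/env bash
# Ping each test-network address once (1 s timeout) and report reachability.
check_test_network() {
  local ip
  for ip in "$@"; do
    if ping -c 1 -W 1 "$ip" >/dev/null 2>&1; then
      echo "$ip reachable"
    else
      echo "$ip UNREACHABLE"
    fi
  done
}

# List running containers (Kolla/OpenStack and Contrail),
# then print the Contrail health summary.
check_services() {
  docker ps --format '{{.Names}}: {{.Status}}'
  contrail-status
}
```

For example, check_test_network 192.168.1.103 pings the controller's test-network address (contrail_api_interface_address in instances.yaml). Add the DUTs' test-network addresses from your own setup to the argument list, then run check_services on each host.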

Performance Test Image and Test Tools Configuration

The test image is based on a basic cloud image, e.g. https://cloud-images.ubuntu.com/xenial/current/xenial-server-cloudimg-amd64-disk1.img

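```bash
# Remove the root password from the image so root can log in without one
sudo apt-get install qemu-utils
# Load the network block device driver with partition support
sudo modprobe nbd max_part=8
wget --timestamping --tries=1 https://cloud-images.ubuntu.com/xenial/current/xenial-server-cloudimg-amd64-disk1.img
# Expose the image as /dev/nbd0 and mount its first partition
sudo qemu-nbd --connect=/dev/nbd0 xenial-server-cloudimg-amd64-disk1.img
sudo mount /dev/nbd0p1 /mnt/
# Empty root's password field in /etc/passwd
sudo sed -i "s/root:x:/root::/" /mnt/etc/passwd
sudo umount /mnt
sudo qemu-nbd --disconnect /dev/nbd0
```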
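The sed call is the only step that changes the image. It replaces the x placeholder in root's /etc/passwd entry with an empty password field, which typically lets root log in without a password. The sketch below applies the same edit to a scratch file so its effect can be seen before touching the image. The function name and the sample passwd lines are made up for the demonstration.

```bash
#!/usr/bin/env bash
# The same sed edit as above, wrapped so it can be tried on a scratch file.
clear_root_password() {
  sed -i "s/root:x:/root::/" "$1"
}

demo=$(mktemp)
# Made-up sample entries standing in for the image's /etc/passwd
printf 'root:x:0:0:root:/root:/bin/bash\ndemo:x:1000:1000::/home/demo:/bin/bash\n' > "$demo"
clear_root_password "$demo"
cat "$demo"
# root::0:0:root:/root:/bin/bash
# demo:x:1000:1000::/home/demo:/bin/bash
rm -f "$demo"
```

The pattern is not anchored. Writing it as s/^root:x:/root::/ would avoid touching any other account whose name ends in "root".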

Configure Flavor

For performance testing, we suggest creating a flavor with the following features:

- 5 vCPUs

- 6GB memory

- 20GB disk

- Metadata with hugepages enabled (hw:mem_page_size=large)
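With the OpenStack client, such a flavor can be created in two steps: create it with the vCPU, RAM (in MB) and disk (in GB) sizes, then attach the hugepage property. This sketch reads the hugepage metadata as the Nova extra spec hw:mem_page_size=large, which is an assumption. The flavor name perf.flavor, the run wrapper and the DRY_RUN switch are hypothetical.

```bash
#!/usr/bin/env bash
# Print commands instead of executing them when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# 5 vCPUs, 6 GB RAM (6144 MB), 20 GB disk, backed by large (huge) pages.
create_perf_flavor() {
  local name="${1:-perf.flavor}"   # hypothetical flavor name
  run openstack flavor create --vcpus 5 --ram 6144 --disk 20 "$name" &&
  run openstack flavor set --property hw:mem_page_size=large "$name"
}
```

After loading the admin credentials, run create_perf_flavor perf.flavor. Use DRY_RUN=1 create_perf_flavor first to review the commands.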

Configure Network/Subnetwork

For performance testing, we suggest creating 4 networks, because the VPPV test uses 4 ports, for example:

- Test-network-1, 1.1.1.0/24

- Test-network-2, 2.2.2.0/24

- Test-network-3, 3.3.3.0/24

- Test-network-4, 4.4.4.0/24
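The four networks follow a regular pattern: network N gets the subnet N.N.N.0/24. They can therefore be created in a loop with the OpenStack client. This sketch assumes one subnet per network and names the networks Test-network-1 to Test-network-4. The subnet names (Test-subnet-N), the run wrapper and the DRY_RUN switch are hypothetical.

```bash
#!/usr/bin/env bash
# Print commands instead of executing them when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create Test-network-1..4 with subnets 1.1.1.0/24 .. 4.4.4.0/24.
create_test_networks() {
  local i
  for i in 1 2 3 4; do
    run openstack network create "Test-network-$i" || return 1
    run openstack subnet create --network "Test-network-$i" \
      --subnet-range "$i.$i.$i.0/24" "Test-subnet-$i" || return 1
  done
}
```

DRY_RUN=1 create_test_networks prints the eight commands; without the switch, the loop stops at the first command that fails.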